If you only drive occasionally, you might not be very picky about the car you use. But if you are a professional driver who spends every day on the road, you will certainly become a demanding user, expecting the car to be highly performant, comfortable, and reliable. Similarly, car manufacturers facing discerning professional drivers must spare no effort to perfect their vehicles, as any minor flaw could lead to customer loss.

Large language models (LLMs) possess powerful reasoning capabilities and the ability to generalize from limited information, fundamentally changing the way we process information and solve problems. However, the market for general-purpose LLMs is already dominated by a few large companies. For LLM system developers, the real value lies in solving specific tasks in vertical domains more effectively and efficiently.

Nevertheless, this is no easy task. The capabilities of large models and their unpredictability are like a high-performance but difficult-to-control car. Developers need to continually iterate and evaluate, maximizing the model's intelligence while ensuring the stability and predictability of its output, keeping variability within an acceptable range. This is akin to creating a car that is both high-performing and easy to handle. Only by doing so can they stand out in the fierce market competition and become the preferred choice of users.

Understanding LLM-based System Evaluation

When it comes to evaluation, many people first think of evaluating the LLM itself. However, evaluating an LLM and evaluating a system built on an LLM are completely different concepts. Evaluating an LLM focuses on the model’s performance and capabilities, like measuring a person’s IQ. On the other hand, evaluating a system or application built on an LLM emphasizes its performance in solving real-world problems in specific scenarios, much like assessing a professional's job performance in a particular field.

For LLM-based system or application developers, it’s usually unnecessary to spend too much effort on evaluating the general capabilities of the model. Instead, they can start with the most capable models and focus on how to leverage the model’s abilities to build workflows that solve tasks in vertical domains.

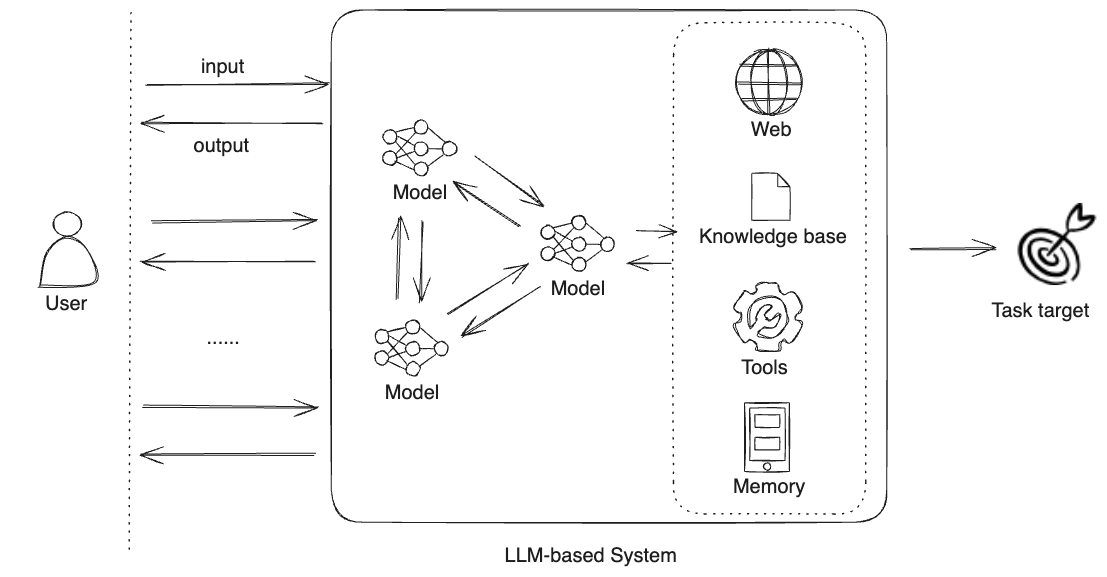

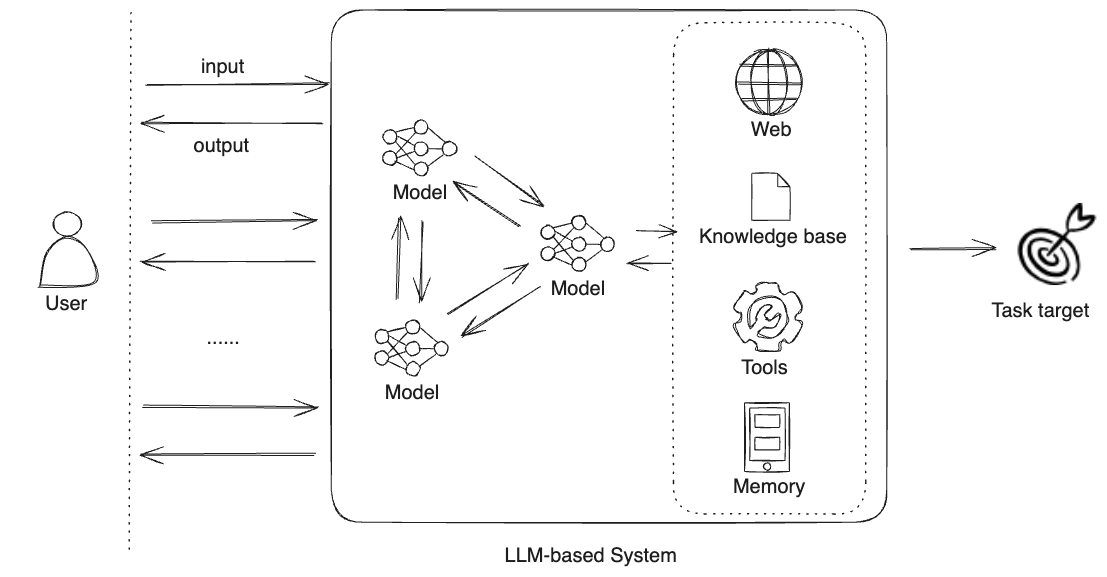

LLM systems are not merely simple Q&A systems but consist of multiple intertwined decision-making and execution steps. Previously, decision-making often relied on classification models trained through supervised learning. Now, large models can achieve this through tool-calling mechanisms. The execution steps can be handled by large models or through a combination of LLMs and external knowledge, known as Retrieval-Augmented Generation (RAG), or by external RPA workflows, with the LLM summarizing the results.

In this process, prompts, models, and interaction flows need continuous optimization. Complex systems may use different models at different stages and may also involve collaboration among multiple models. The greater challenge lies in building a system that combines intelligence with reliability.

This process is not as simple as stacking building blocks; it is a challenging systems engineering task. Constructing a well-performing LLM-based system typically goes through four stages:

- Prototype Foundation: Conceptualize, rapidly build a prototype, and perform initial validation.

- End-to-End Evaluation: Set goals, determine evaluation criteria, prepare evaluation samples, run end-to-end evaluations, and analyze results.

- Component-wise Evaluation and Optimization: Identify issues by evaluating the system's components and intermediate steps, and optimize the system accordingly.

- Real-world Validation: Deploy the system online once it meets the expected standards of quality and performance. This is not the end of optimization but a new beginning. Continuous monitoring, data collection, and repeated optimization are necessary after deployment.

Next, we will walk through the ideas and methods of validation and evaluation for each of these four stages.

Step 1: Quickly Build Prototypes, Iterate and Validate

When you have any creative ideas, rapidly building a prototype is a crucial step in bringing those ideas to fruition.

As mentioned earlier, LLM systems are composed of a series of intertwined decision-making and execution steps. Each step that involves an LLM requires selecting an appropriate base model and writing customized prompts, and may also involve knowledge retrieval or tool calls. The challenge is how to chain these steps together through workflows to achieve automated execution around target tasks.

Many development tools can help us quickly build prototypes: development frameworks like LangChain, OpenAI's Assistants API, and visual workflow orchestration platforms like Coze, Dify, Flowise, and Langflow. These allow us to ignore some underlying technical details and focus more energy on business processes.

In the process of building system prototypes, we already need to conduct preliminary validation of the system. The goal is to ensure that the workflow can run normally and has a certain fault tolerance for unexpected situations, achieving baseline generation stability.

In traditional machine learning training, to obtain a usable system, we need to do a lot of data preparation. This includes steps such as data collection, cleaning, and labeling. However, because LLMs have already been pre-trained on massive diverse data, they possess powerful basic intelligence and excellent generalization abilities. This makes it possible for us to build prototypes through rapid iteration.

When the initial system's output is unsatisfactory, we can analyze the causes, summarize common patterns, and optimize the prompts with prompt engineering techniques (such as reflection, chain-of-thought, and few-shot prompting). We can then compare the new outputs with the original ones, without having to retrain each time as in traditional machine learning.
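
A minimal sketch of this compare-and-iterate loop is shown below. It assumes a hypothetical `generate(prompt, user_input)` function standing in for a real model call, and a crude "the output must contain this keyword" check; both are illustrative assumptions, not part of any specific tool.

```python
from typing import Callable

def compare_prompts(
    generate: Callable[[str, str], str],
    prompts: dict[str, str],
    cases: list[tuple[str, str]],
) -> dict[str, float]:
    """Return, for each prompt variant, the fraction of test cases it passes.

    A case is (user_input, must_contain); it passes when the generated
    output contains the expected keyword (case-insensitive).
    """
    scores = {}
    for name, prompt in prompts.items():
        passed = sum(
            1
            for user_input, must_contain in cases
            if must_contain.lower() in generate(prompt, user_input).lower()
        )
        scores[name] = passed / len(cases)
    return scores

# Hypothetical stand-in for a real LLM call: it only produces a useful
# answer when the prompt asks it to reason step by step.
def fake_generate(prompt: str, user_input: str) -> str:
    if "step by step" in prompt:
        return f"Reasoning about '{user_input}'... Answer: refund"
    return "I am not sure."

prompts = {
    "v1_plain": "Answer the customer.",
    "v2_cot": "Think step by step, then answer the customer.",
}
cases = [
    ("Can I get my money back?", "refund"),
    ("My order arrived broken.", "refund"),
]
scores = compare_prompts(fake_generate, prompts, cases)
print(scores)
```

In a real prototype, `fake_generate` would be replaced by an actual model call, and the handful of hand-written cases would come from the failure patterns observed so far.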

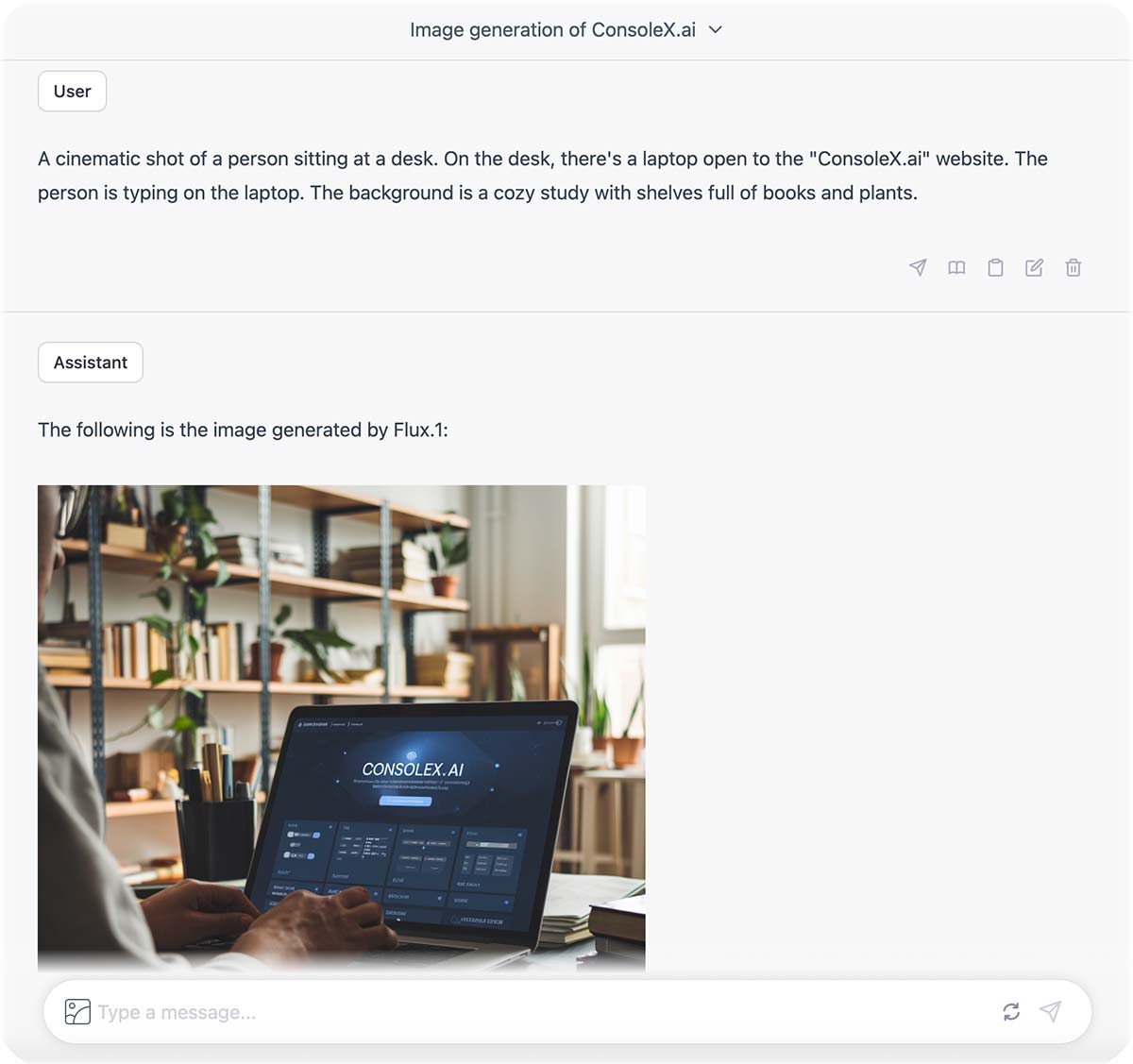

At this stage, the requirement for sample size is not high. We can use human-made test cases, use the Playgrounds provided by LLM providers and orchestration tools, or use ConsoleX.ai's one-stop workbench for testing, and leverage human expert experience for evaluation.

However, evaluation in Playgrounds can only cover specific use cases and cannot give us enough confidence that the system will generate stable responses. At this point, we should move on to the next stage and conduct more systematic evaluations based on larger-scale data.

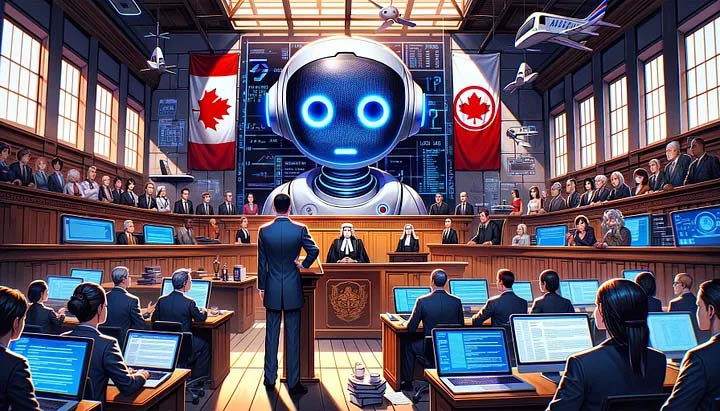

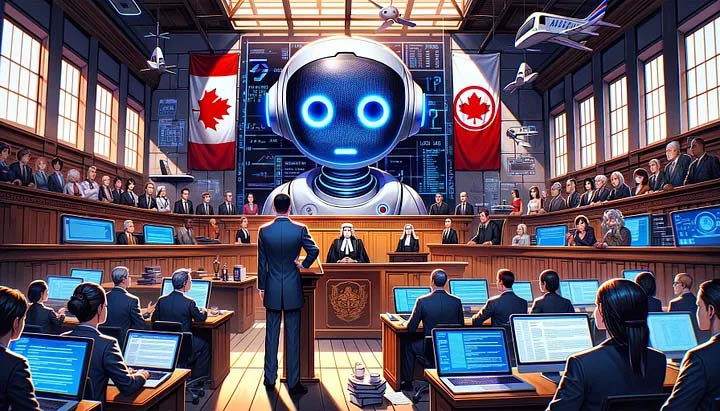

Unfortunately, many developers put time and energy into system building but bring products to market without thorough evaluation. It's like driving a prototype car that hasn't passed safety inspections onto the road: the results can be serious or even disastrous. For example, Air Canada lost a case and had to compensate a customer after its customer service chatbot gave misleading information about the airline's bereavement fare refund policy. In professional fields such as healthcare, law, and psychological counseling, using LLM-based systems to provide services obviously requires more caution, as any mistake could cost the company dearly.

Step 2: Preparing for End-to-End Evaluation

So, how can we more systematically optimize LLM systems through evaluation?

First, be prepared: this new stage calls for new methods, professional evaluation tools and frameworks, and some time and effort spent on preparation. It won't be accomplished overnight, and repeated iterations may be needed before all metrics reach their benchmark targets.

However, if you want your LLM-based system to stand out from fierce competition or truly be competent for work tasks in vertical domains, rather than being busy dealing with various unexpected situations, such investment is necessary and worthwhile.

Specifically, before entering the end-to-end evaluation stage, you need to make the following three types of preparations:

- Establish evaluation metrics and benchmarks: Based on business goals and experience from the previous stage, determine the dimensions to be assessed and quantify the goals to be achieved in each dimension as benchmarks.

- Determine evaluation methods and establish evaluators: You can choose between manual evaluation and automatic evaluation. For automatic evaluation, you also need to build evaluators around evaluation metrics and quantitative standards to automate the evaluation process.

- Prepare the datasets needed for running evaluations: Spend time preparing domain-relevant evaluation datasets.

Determine Evaluation Metrics and Benchmarks

First, we need to determine the evaluation metrics and the final benchmarks to be achieved.

In traditional NLP tasks, commonly used evaluation metrics include accuracy, precision, recall, and F1 score. For tasks based on generative AI, determining evaluation metrics is usually more complex and diverse.

The setting of evaluation metrics is also related to your actual application scenario. For example, for medical applications, reference accuracy might be key, but for casual chatbots, contextual coherence is more important.

In practical application scenarios, most LLM systems need to be evaluated on multiple metrics, not just a single metric. For example, customer service chatbots need to be evaluated on dimensions such as answer accuracy, coherence, knowledge citation correctness, response time, and user satisfaction.

After determining the metrics to be evaluated, we need to set corresponding benchmarks. Setting benchmarks helps clarify optimization directions and provides references for system improvement.

Good benchmarks should be both measurable and achievable:

- Measurability: Use quantitative indicators or clear qualitative scales, rather than vague expressions.

- Achievability: Set targets based on industry benchmarks, prior experiments, artificial intelligence research, or expert knowledge, avoiding benchmark targets that are detached from reality.
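
As a rough sketch, measurable benchmarks can be written down as data and checked mechanically. The metric names and target values below are purely illustrative assumptions for a customer service chatbot, not recommended numbers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Benchmark:
    """A quantified target for one evaluation metric."""
    metric: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target

# Illustrative targets only (assumed values).
benchmarks = [
    Benchmark("answer_accuracy", 0.90),
    Benchmark("citation_correctness", 0.95),
    Benchmark("p95_latency_seconds", 3.0, higher_is_better=False),
]

def check_benchmarks(
    observed: dict[str, float], targets: list[Benchmark]
) -> dict[str, bool]:
    """Map each metric name to whether its benchmark is met."""
    return {b.metric: b.is_met(observed[b.metric]) for b in targets}

report = check_benchmarks(
    {
        "answer_accuracy": 0.92,
        "citation_correctness": 0.91,
        "p95_latency_seconds": 2.4,
    },
    benchmarks,
)
print(report)
```

Writing targets this way forces each one to be a number with a direction, which is the "measurable" half of the requirement; whether a target is achievable still has to come from prior experiments or domain expertise.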

Manual Eval vs. Auto Eval

Next, we need to determine the method of evaluation based on the metrics. We can choose between manual evaluation or automatic evaluation.

While manual eval can provide detailed feedback and understanding of complex issues, it is time-consuming and susceptible to subjective bias. In contrast, auto eval can quickly and consistently process large amounts of data, providing objective results, but requires more time and effort in preparation.

Evaluation needs to be based on large-scale data, and as LLM capabilities continue to evolve, the same evaluation may need to be repeated. Automating the evaluation process as much as possible therefore becomes very important.

If auto eval is chosen, the next task is to establish evaluators around metrics and benchmarks. An evaluator is an automated program that performs the evaluation process according to one or more metrics and judges whether the eval results meet the benchmarks.

Based on how they complete their tasks, evaluators can be divided into algorithm-based, specialized model-based, and LLM-based evaluators. For scenarios with clear rules, we can adopt algorithm-based evaluators, such as judging the degree of match between texts, computing similarity with specific algorithms, or checking the consistency of JSON data or schemas. For classification tasks, we can use specialized models trained with traditional ML methods, such as pre-trained sentiment analysis models that evaluate the sentiment polarity (positive, negative, neutral) of user feedback. LLM-based evaluators can handle more complex and diverse generation scenarios, helping verify that the quality and user experience of LLM systems meet the expected benchmarks.
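
To make the algorithm-based category concrete, here is a small sketch of three rule-based evaluators using only the Python standard library. The function names are our own for illustration, and the JSON check is a simple "required fields with expected types" test rather than full JSON Schema validation.

```python
import json
from difflib import SequenceMatcher

def exact_match(output: str, expected: str) -> bool:
    """True when the texts match after trimming whitespace and lowercasing."""
    return output.strip().lower() == expected.strip().lower()

def text_similarity(output: str, expected: str) -> float:
    """Character-level similarity in [0, 1] based on difflib's ratio."""
    return SequenceMatcher(None, output, expected).ratio()

def json_has_fields(output: str, required: dict[str, type]) -> bool:
    """True when output is a JSON object containing every required field
    with a value of the expected Python type."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    return all(isinstance(data.get(key), typ) for key, typ in required.items())

print(exact_match(" Paris ", "paris"))
print(round(text_similarity("refund approved", "refund approved!"), 2))
print(json_has_fields('{"intent": "refund", "confidence": 0.8}',
                      {"intent": str, "confidence": float}))
```

Evaluators like these are cheap and deterministic, so they are a good first line of checks before more expensive model-based evaluation.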

For the same evaluation metric, multiple types of evaluators can be used. For example, text similarity can be evaluated with both embedding distance algorithms and LLMs.
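
For the embedding-distance route, the core computation is usually cosine similarity between two embedding vectors. The sketch below assumes the vectors have already been produced by some embedding model; the tiny vectors used here are made up for illustration.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Toy "embeddings" (assumed values, not real model output).
answer_vec = [0.2, 0.7, 0.1]
reference_vec = [0.25, 0.65, 0.05]
print(round(cosine_similarity(answer_vec, reference_vec), 3))
```

The pass threshold applied to this score is itself a benchmark that should be calibrated on examples from the target domain.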

LLM as a Judge

Why can LLMs excel in the role of evaluators?

First, there may be differences in intelligence levels between models used for generation and those used for evaluation. Due to practical factors such as cost, privacy, device limitations, or latency, we don't need to (or cannot) use the most capable models in all scenarios. However, to pursue the accuracy of evaluation results, we usually need to apply the most capable models to evaluation.

Moreover, the focus of generation and evaluation processes is different. For example, in content creation scenarios, generative models need to possess high creativity and flexibility, capable of generating diverse and creative content, like writers considering various possibilities during creation. Evaluation, on the other hand, requires models to strictly review and score text under specific standards, like editors evaluating manuscript quality against established criteria. Generative models focus on diversity and creativity, while evaluation models focus on accuracy and consistency.

Depending on whether ideal answers are provided, evaluators can be divided into two categories: with reference and without reference. Reference-based evaluators require reference answers to be prepared before evaluation begins; these can be one or more options to choose from, a perfect answer, or a problem-solving thought process. If no reference answer is set, the prompt needs to guide the model to make judgments based on its own knowledge and reasoning ability.

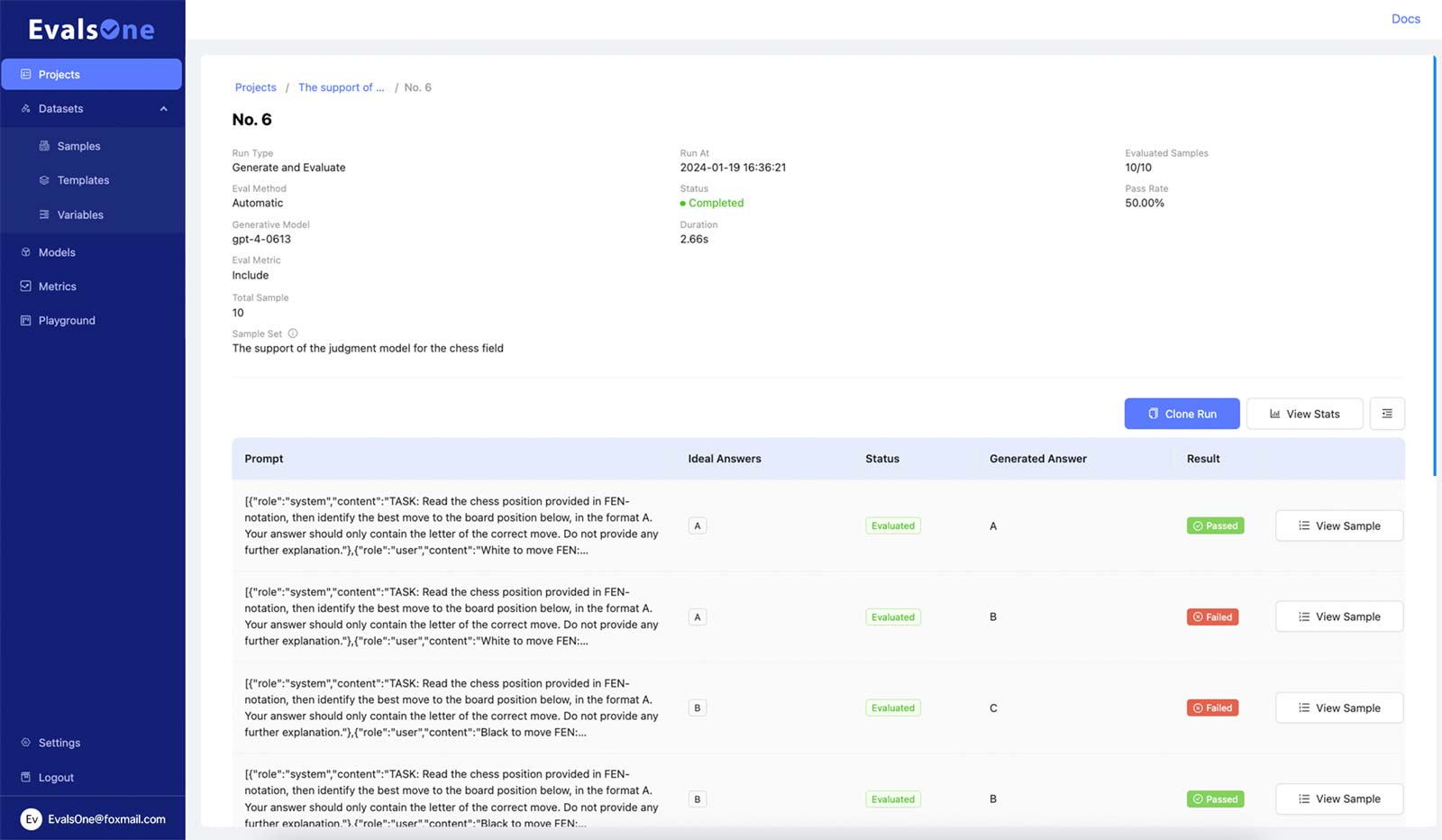

Using LLMs as judges for evaluation is essentially a generation task relying on evaluation prompt templates. Therefore, using high-level AI models and adopting well-tested prompts is crucial for the accuracy of eval results. For more personalized scenarios, general evaluation prompts may not be fully applicable. In this case, it's necessary to customize evaluators that meet specific needs. EvalsOne provides a method to create custom evaluators through YAML configuration files.
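
The sketch below illustrates the idea of an evaluation prompt template that works both with and without a reference answer. The template wording, the 1 to 5 scale, and the `call_llm` parameter are all assumptions made for illustration; `call_llm` stands for whatever function sends a prompt to your chosen judge model and returns its text reply, and this is not EvalsOne's configuration format.

```python
import re
from typing import Callable, Optional

JUDGE_TEMPLATE = """You are a strict evaluator.
Question: {question}
Answer to evaluate: {answer}
{reference_block}
Rate the answer's correctness from 1 (wrong) to 5 (fully correct).
Reply in the form "Score: <number>" followed by a one-sentence reason."""

def build_judge_prompt(question: str, answer: str,
                       reference: Optional[str] = None) -> str:
    """Fill the template, with or without a reference answer."""
    if reference is not None:
        reference_block = f"Reference answer: {reference}"
    else:
        reference_block = "No reference answer is provided; rely on your own knowledge."
    return JUDGE_TEMPLATE.format(
        question=question, answer=answer, reference_block=reference_block
    )

def parse_score(judge_reply: str) -> Optional[int]:
    """Extract a 1-5 score from the judge's reply, or None if it is malformed."""
    match = re.search(r"Score:\s*([1-5])\b", judge_reply)
    return int(match.group(1)) if match else None

def judge(call_llm: Callable[[str], str], question: str, answer: str,
          reference: Optional[str] = None) -> Optional[int]:
    """Run one LLM-as-judge evaluation and return the parsed score."""
    return parse_score(call_llm(build_judge_prompt(question, answer, reference)))

# Hypothetical stub in place of a real judge model.
def fake_llm(prompt: str) -> str:
    if "Reference answer:" in prompt:
        return "Score: 5. The answer matches the reference."
    return "Score: 3. Plausible but unverified."

print(judge(fake_llm, "What is the capital of France?", "Paris", reference="Paris"))
print(judge(fake_llm, "What is the capital of France?", "Paris"))
```

Returning `None` for a malformed reply, instead of guessing a score, keeps judge failures visible so they can be counted and reviewed separately.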

Finally, we also need to be aware of the limitations of using LLMs as judges. Because the approach rests on LLMs and evaluation prompts, it is limited by the quality of those prompts and the basic capabilities of the models used. Moreover, LLM judges still have a certain probability of making mistakes, and the prompts used for evaluation themselves need to be evaluated and verified.

However, this doesn't mean we should abandon LLMs due to these challenges. On the contrary, from the overall perspective of cost and benefit, using LLMs as judges has significant advantages in many respects. With continuous technological progress, these issues can be gradually mitigated. By combining the advantages of manual and auto evals, we can establish a more efficient, accurate, and comprehensive evaluation system.

Preparing Eval Datasets

Next, we need to prepare larger evaluation datasets, also called Test Cases. Rather than preparing massive evaluation datasets at once, we can consider adopting a progressive approach. First, expand the sample set to a larger scale, run the evaluation, and if the results meet the standards, then expand to an even larger sample set, increasing the diversity of the data and introducing challenging edge cases. If the evaluation on the expanded sample set doesn't meet the standards, then we need to identify problems and optimize the system.
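
The progressive approach can be sketched as a simple loop: keep adding batches of test cases while the score stays at or above the target, and stop to optimize as soon as it drops below. The `run_eval` function and the `passes` field on each case are hypothetical placeholders for a real evaluation run.

```python
from typing import Callable

def progressive_eval(
    run_eval: Callable[[list[dict]], float],
    case_batches: list[list[dict]],
    target: float,
) -> list[tuple[int, float]]:
    """Evaluate on a growing sample set.

    Returns (sample_count, score) for each stage that was run and stops
    at the first stage whose score falls below the target.
    """
    cases: list[dict] = []
    history: list[tuple[int, float]] = []
    for batch in case_batches:
        cases.extend(batch)
        score = run_eval(cases)
        history.append((len(cases), score))
        if score < target:
            break  # identify problems and optimize before expanding further
    return history

# Stand-in evaluation: each case carries a precomputed pass/fail flag.
def fake_run_eval(cases: list[dict]) -> float:
    return sum(1 for c in cases if c["passes"]) / len(cases)

batches = [
    [{"passes": True}] * 10,                            # small, easy set
    [{"passes": True}] * 16 + [{"passes": False}] * 4,  # adds edge cases
    [{"passes": True}] * 50,                            # never reached here
]
history = progressive_eval(fake_run_eval, batches, target=0.9)
print(history)
```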

For a long time, preparing evaluation sample sets has been primarily done manually, which is both time-consuming and tedious. With the continuous advancement of large language model capabilities, using LLMs to expand evaluation datasets has become possible. More and more research and solutions are emerging in this field. For example, Claude's Workbench integrates the function of intelligently generating variables, which can automatically generate diverse test cases.

However, using large models to expand evaluation sample sets also faces some challenges. A major problem is the tendency for data homogenization, leading to a lack of diversity in test cases. Additionally, large language models sometimes produce hallucinations, generating incorrect results. Therefore, at the current stage, manual review of AI-generated sample data remains an indispensable part of the process. Manual review not only ensures the accuracy and diversity of data but also helps identify and correct errors that occur during model generation.
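
One inexpensive aid to manual review is flagging near-duplicate generated cases before a human looks at them. The sketch below uses character-level similarity from the standard library; the 0.9 threshold is an assumed starting point, and embedding-based similarity would catch paraphrases that this check misses.

```python
from difflib import SequenceMatcher

def find_near_duplicates(
    cases: list[str], threshold: float = 0.9
) -> list[tuple[int, int]]:
    """Return index pairs of test cases whose text similarity meets the threshold."""
    pairs = []
    for i in range(len(cases)):
        for j in range(i + 1, len(cases)):
            ratio = SequenceMatcher(
                None, cases[i].lower(), cases[j].lower()
            ).ratio()
            if ratio >= threshold:
                pairs.append((i, j))
    return pairs

generated = [
    "How do I reset my password?",
    "How do I reset my password",
    "What is your refund policy for damaged items?",
]
duplicates = find_near_duplicates(generated)
print(duplicates)
```

The pairwise comparison is quadratic in the number of cases, which is fine for a few hundred generated samples but would need a smarter approach for much larger sets.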

By combining human and AI power, we can more efficiently generate diverse test cases, ensuring that the system performs excellently in various situations.

Step 3: Component-wise Evaluation and Optimization

Once the metrics and benchmarks are determined, and automated evaluators are established, we can start running end-to-end evaluations to judge the overall quality and performance of the current system.

Next, collect result data and judge the gap between the evaluation results and the expected benchmarks. If the expected goals are achieved, we can expand the dataset and increase the challenge of the data. But if the expected goals are not met, we need to analyze the reasons from the process and optimize the system.

This is again a difficult process that often gives people a feeling of not knowing where to start. Is it a problem with the model itself, or with the prompts, or with the RAG data retrieval process, or with the design of the Agent workflow?

At this point, we need to break down the whole system into specific sub-steps and components and put them under the microscope for evaluation. This requires us to have a further understanding of the working mechanism of the LLMs and the systems.

For example, a RAG pipeline can be divided into a retrieval process and a generation process. The chunking method, embedding algorithm, retrieval method, and whether the LLM generation faithfully uses the retrieved contexts all affect the final result. At this point, we need to deconstruct the process and trace exactly what happened at each step, which is a bit like debugging in software development. Some third-party tools, such as LangChain and Langfuse, provide this kind of tracing functionality, giving us a better grasp of process details so that we can adjust and optimize system parameters.

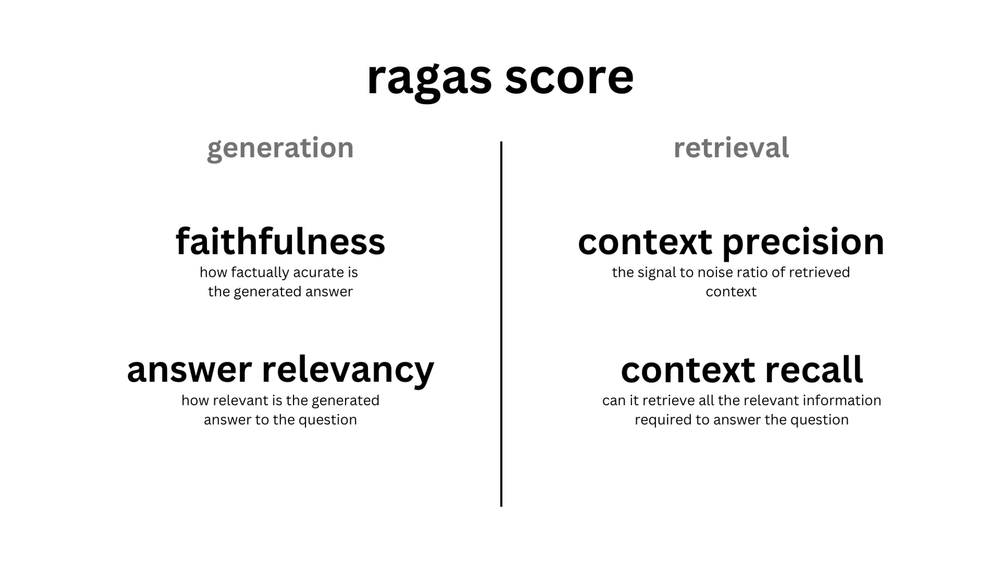

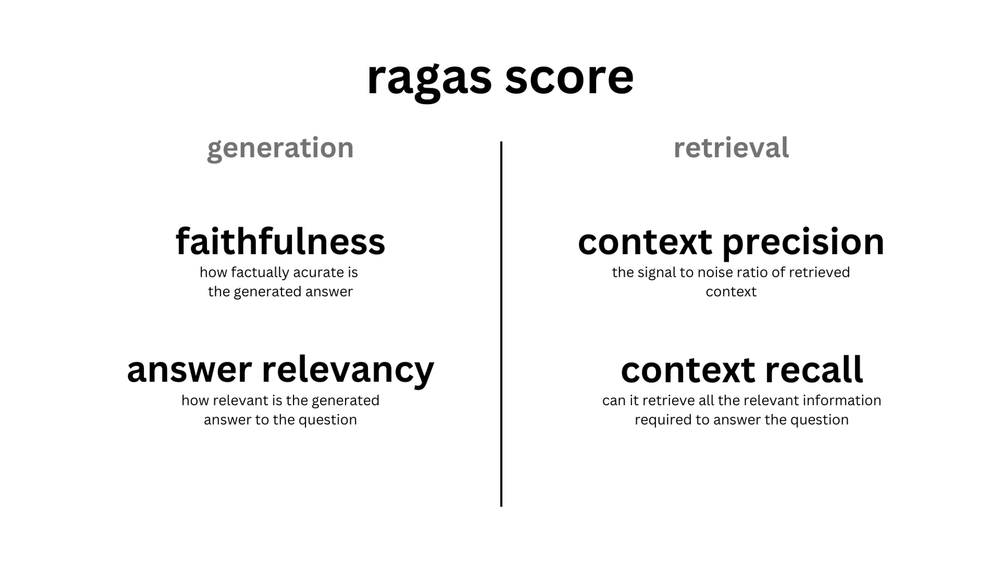

However, tracing is based on a single sample or a few samples. For batch data, more professional evaluation tools like EvalsOne and systematic evaluation methods are needed. In component-wise evaluation, the eval metrics, the components involved, and the benchmarks needed differ from those in end-to-end evaluation. Still taking the RAG pipeline as an example, Ragas proposed four evaluation metric dimensions for RAG evaluation: answer relevance, context precision, context recall, and faithfulness, providing a practical approach for improving the overall effect of the RAG pipeline through evaluation.
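
To give a feel for what the retrieval-side metrics measure, here is a deliberately simplified, set-based sketch of context precision and recall. It assumes each test case has hand-labeled relevant chunk IDs; this is not how Ragas computes its metrics (Ragas relies on LLM judgments and takes ranking into account), only an illustration of the underlying idea.

```python
def context_precision(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of retrieved chunks that are actually relevant."""
    if not retrieved:
        return 0.0
    hits = sum(1 for chunk_id in retrieved if chunk_id in relevant)
    return hits / len(retrieved)

def context_recall(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of relevant chunks that the retriever managed to find."""
    if not relevant:
        return 0.0
    found = sum(1 for chunk_id in relevant if chunk_id in retrieved)
    return found / len(relevant)

# Hypothetical chunk IDs for one test question.
retrieved_ids = ["c1", "c4", "c7", "c9"]
relevant_ids = {"c1", "c7", "c8"}

precision = context_precision(retrieved_ids, relevant_ids)
recall = context_recall(retrieved_ids, relevant_ids)
print(precision, round(recall, 3))
```

Low precision points toward noisy retrieval (chunking or ranking issues), while low recall suggests the retriever is missing needed material; separating the two helps decide which component to tune first.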

Evaluation is also extremely important for AI Agents. A complex AI Agent needs to use prompt chains, needs to have the ability to make decisions and utilize external tools, needs to combine vertical domain workflow RPA, and needs to use the results of the workflow for secondary generation. Projects like AutoGPT have shown us the potential of Agents, but also exposed their limitations. Every step of decision-making during Agent execution may lead to deviation from the goal, which requires the Agent to have the ability of self-reflection and error correction, and also requires evaluation of the execution effect of each step.

The evaluation of Agents is still a challenging and exploratory field. Some have proposed the concept of Guardrail for Agent evaluation, correcting (e.g., regenerating) in a timely manner by evaluating the effect of intermediate steps in real-time. However, the "Guardrail method" can only prevent the Agent's execution from deviating from the track as much as possible, and cannot guarantee that the Agent can complete the established goals well. Moreover, repeated error correction may bring very high additional delays and costs.
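
A guardrail of this kind can be sketched as a check-and-regenerate loop with a retry cap, which also makes the latency and cost trade-off explicit. The `step` and `check` callables are hypothetical placeholders for an agent step and its real-time evaluator.

```python
from typing import Callable

def run_step_with_guardrail(
    step: Callable[[], str],
    check: Callable[[str], bool],
    max_retries: int = 2,
) -> tuple[str, int, bool]:
    """Run one agent step, regenerating when the guardrail check fails.

    Returns (last_output, attempts_used, passed). Every retry is an extra
    model call, so max_retries bounds the added latency and cost.
    """
    attempts = 0
    while True:
        attempts += 1
        output = step()
        if check(output):
            return output, attempts, True
        if attempts > max_retries:
            return output, attempts, False

# Stand-in for an agent step that succeeds on its third attempt.
drafts = iter(["", "DROP TABLE users", "SELECT name FROM users"])
output, attempts, passed = run_step_with_guardrail(
    step=lambda: next(drafts),
    check=lambda sql: sql.startswith("SELECT"),
)
print(output, attempts, passed)
```

Note that passing the check only shows the step stayed on track by that one criterion; it does not show that the agent's overall goal was achieved, which is why end-to-end evaluation is still needed.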

Once process-based analysis and evaluation have helped us find the causes and make targeted optimizations, we still need to re-run end-to-end evaluations to confirm that the LLM system has indeed improved. We may need to repeat this process from whole to components and back to whole multiple times until the expected benchmarks are finally reached.

Sometimes, we may find that system optimization is limited by the prototype building tools used. For example, visual orchestration tools like Coze, Dify, and Langflow lower the barriers to Agent building but also have limited tuning capabilities. In practice, we can first use visual orchestration tools to quickly build prototypes, evaluate the results, and try to tune. But if we are consistently unable to reach the expected benchmark due to the limitations of the framework, we should consider rebuilding the system with lower-level tools, so that we have better control over the intermediate processes and parameters of the LLM-based system.

Step 4: Real-world Validation and Monitoring

"It's easy to make something cool with LLMs, but very hard to make something production-ready with them"

——Chip Huyen

The more thorough the previous evaluations, the more confidence we will have in the performance of the LLM-based system in the real world. However, due to the characteristics of the production environment, we should maintain stronger vigilance against errors, so evaluation and monitoring in the production environment are often inseparable.

When LLM systems provide services in a production environment, it's impossible to fully evaluate each output before presenting it to end users, as this would increase system latency and affect user experience. However, we can still collect real-world data through APIs for post-hoc evaluation, and strengthen alerting mechanisms to make agile responses and necessary handling for abnormal situations.
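
A minimal sketch of such an alerting mechanism is a rolling window over post-hoc evaluation results that fires when the recent failure rate exceeds a tolerance. The class name, window size, and thresholds below are assumptions for illustration.

```python
from collections import deque

class FailureRateMonitor:
    """Track recent post-hoc evaluation results and signal when failures spike."""

    def __init__(self, window: int = 100, max_failure_rate: float = 0.05,
                 min_samples: int = 20) -> None:
        self.results: deque[bool] = deque(maxlen=window)  # oldest results drop off
        self.max_failure_rate = max_failure_rate
        self.min_samples = min_samples

    def record(self, passed: bool) -> bool:
        """Record one evaluated output; return True if an alert should fire."""
        self.results.append(passed)
        if len(self.results) < self.min_samples:
            return False  # too little data to judge
        failures = sum(1 for ok in self.results if not ok)
        return failures / len(self.results) > self.max_failure_rate

monitor = FailureRateMonitor(window=10, max_failure_rate=0.2, min_samples=5)
alerts = [monitor.record(ok) for ok in [True, True, True, True, False, False]]
print(alerts)
```

In production, `record` would be called from the job that evaluates sampled outputs after they have been served, and a `True` result would trigger whatever notification channel the team uses.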

At the same time, it's necessary to periodically review and check the output quality. Real-world situations can always give us some inspiration for improving the system. From development to testing and then to production, the sample data keeps broadening, and continuous integration and delivery (CI/CD) practices let us fold each improvement back in, making the system more robust.

However, this doesn't mean our goal is to make the system achieve 99.999% accuracy. The intelligence and generalization ability of generative AI inevitably comes with unpredictability. From a business perspective, what we need to do is reduce the probability of system errors to below the tolerance limit, while achieving cost reduction and efficiency improvement, thereby maximizing business benefits.

Finally, let's talk about the tools needed for LLM-based system evaluation. If you're a tech expert, there are many open-source evaluation frameworks to choose from, such as OpenAI Evals, Promptfoo, and Ragas. LangChain and LlamaIndex also include evaluation components. Their advantages are that they are open-source, transparent, and free, and you don't have to worry too much about data privacy issues.

However, open-source evaluation tools often provide more technical-level solutions, requiring users to have a certain development background, and are harder for team members outside of development to get started with. Moreover, they provide only evaluation tools instead of complete solutions. If you want to automate the evaluation process, including data collection, preparation, iterative evaluation process management, and monitoring in the production environment, secondary development is required.

Many LLM providers and hosting platforms also provide evaluation functions, but they often only support their own models and have limited functionality. By comparison, SaaS platforms like EvalsOne offer friendlier interfaces and workflows and broader model support, lower the threshold for introducing evaluation processes in teams, and provide a complete set of evaluation solutions from the development environment to the production environment. This allows developers to focus more energy on system optimization, rather than reinventing the wheel on evaluation systems or having to deal with sample data all day.

Of course, what is a wise decision depends on the specific situation of each team and scenario. For example, in scenarios where data privacy is extremely important, adopting an open-source solution may still be the preferred choice. For business-priority scenarios, the intuitive interface and comprehensive solutions of SaaS systems can allow team members of various roles to smoothly participate in the improvement process of LLM-based systems.

Takeaway

Finally, let's summarize the key points of this article:

- LLM-based system evaluation is different from LLM evaluation, focusing more on the specific performance of the system in solving practical problems in vertical scenarios, which is crucial for building reliable, high-performance AI applications.

- Building an outstanding LLM system through evaluation usually goes through four stages: prototype building, end-to-end evaluation, component-wise evaluation and optimization, and real-world validation. Testing and evaluation should run through the entire process.

- From prototype building to end-to-end evaluation, some preparations are needed first: establishing evaluation metrics and benchmarks, determining evaluation methods and creating evaluators, and preparing eval datasets.

- Using an LLM as a judge is an effective method. Introducing auto eval can improve efficiency and effectiveness compared to manual eval, but it's also necessary to accept the uncertainty that comes with it.

- Optimizing LLM-based systems through iterative evaluation is a cyclical process from whole to components and back to whole, needing to be repeated until the benchmark is reached.

- Evaluation in the production environment is inseparable from monitoring, requiring the establishment of alerting mechanisms and continuous improvement driven by results.

- When choosing evaluation solutions, it's necessary to weigh the pros and cons of open-source tools and SaaS platforms, and make decisions based on team situations and application scenarios.

Linking the previous steps together, we can get an overall roadmap for creating excellent LLM-based systems through iterative evaluation:

Although there are already many popular tools and solutions for LLM-based system evaluation, due to the rapid development of generative AI and the complexity of the evaluation process itself, teams that truly incorporate evaluation smoothly into their workflows still only account for a small proportion. Our goal in developing EvalsOne is to make the evaluation process simpler, more intuitive, easy to get started with, while being comprehensive in functionality and cost-controllable.

Later, we will deliver follow-up articles on preparing datasets, establishing metrics and evaluators, and the methods for optimizing various LLM systems through evaluations, enabling teams to fully master evaluation tactics to create more LLM systems with excellent quality and performance.